Programmers are an obvious choice for members of a software team. However, various points of view attribute different value to the other potential roles.

Matt Heusser’s “How to Speak to an Agilista (if you absolutely must)” Lightning Talk from CAST 2011 referred to Agilista programmers who rejected the notion that testers are necessary. Matt elaborates that extreme programming began back in 1999, when testing as a field was not as mature, so these developers spoke about only two primary roles, customer and programmer, while allowing that the “team may include testers, who help the Customer define the customer acceptance tests. … The best teams have no specialists, only general contributors with special skills.” In general, Agile approaches teams as composed of members who “normally take responsibility for tasks that deliver the functionality an iteration requires. They decide individually how to meet an iteration’s requirements.” The Agile variation Scrum suggests teams of “people with cross-functional skills who do the actual work (analyse, design, develop, test, technical communication, document, etc.). It is recommended that the Team be self-organizing and self-led, but often work with some form of project or team management.” At the time, this prevailing view that the whole team should own quality diminished the significance of a distinct testing role. Now that testing has grown, Matt encourages us to revisit this relationship and find ways to engage world-changers and their methodologies to achieve a better result. Privately questioning the value of what testers contribute is more constructive than head-on public confrontation. One source of the problem is that testers have not acknowledged Agile programmers’ awesomeness and specific skills. Acknowledging them produces a more favorable environment in which to discuss the need for testing, which Agilistas do see, though they want to automate it.
Matt advocates observing what works in cross-functional project teams to determine patterns that work in a particular context, engaging our critical thinking skills to develop processes that support better testing. As a process naturalist, Matt reminds us that “[software teams in the wild] may not fit a particular ideology well, but they are important, because by noticing them we can design a software system to have less loss and more self-correction.”

Having not experienced this rejection by Agile zealots for myself despite working only in Agile-friendly software shops, I have struggled with commenting on his suggestions. I decided to dig a bit deeper to find some classic perspectives on roles within software teams so that I can put the XP and Agile assertions into perspective.

One such analogy for the software team is Harlan Mills’ Chief Programmer Team as “a surgical team … one does the cutting and the others give him every support that will enhance his effectiveness and productivity.” In this model, “Few minds are involved in design and construction, yet many hands are brought to bear. … the system is the product of one mind – or at most two, acting uno animo,” referring to the surgeon and copilot roles, which are respectively the primary and secondary programmer. However, this model recognizes a tester as necessary, stating “the specialization of the remainder of the team is the key to its efficiency, for it permits a radically simpler communication pattern among the members,” referring to centralized communication between the surgeon and all other team members, including the tester, administrator, editor, secretaries, program clerk, toolsmith, and language lawyer for a team size of up to ten. This large support staff presupposes that “the Chief Programmer has to be more productive than everyone else on the team put together … in the rare case in which you have a near genius on your staff–one that is dramatically more productive than the average programmer on your staff.”

Acknowledging the earlier paradigm of the CPT, the Pragmatic Programmers describe another well-known approach to team composition: “The [project’s] technical head sets the development philosophy and style, assigns responsibilities to teams, and arbitrates the inevitable ‘discussions’ between people. The technical head also looks constantly at the bigger picture, trying to find any unnecessary commonality between teams that could reduce the orthogonality of the overall effort. The administrative head, or project manager, schedules the resources that the teams need, monitors and reports on progress, and helps decide priorities in terms of business needs. The administrative head might also act as a team’s ambassador when communicating with the outside world.” While these authors do not directly address the role of tester, they state that “Most developers hate testing. They tend to test gently, subconsciously knowing where the code will break and avoiding the weak spots. Pragmatic Programmers are different. We are driven to find our bugs now, so we don’t have to endure the shame of others finding our bugs later.” In contrast, traditional waterfall software teams have individuals “assigned roles based on their job function. You’ll find business analysts, architects, designers, programmers, testers, documenters, and the like. There is an implicit hierarchy here – the closer to the user you’re allowed, the more senior you are.” This hierarchy sets up a competitive relationship between the roles rather than treating them as striving toward the same goal.

A healthier model of cross-functional teams communicates that all of the necessary skills “are not easily found combined in one person: Test Know How, UX/Design CSS, Programming, and Product Development / Management.” This view advocates reducing communication overhead by involving all of the relevant perspectives within the team environment rather than segregating them by job function. Here, a tester role “works with product manager to determine acceptance tests, writes automatic acceptance tests, [executes] exploratory testing, helps developers with their tests, and keeps the testing focus.”

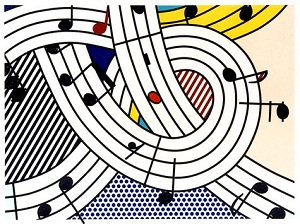

Finally, we arrive at my favorite description of a collaborative software team, found in Peopleware: “the musical ensemble would have been a happier metaphor for what we are trying to do in well-jelled work groups” since “a choir or glee club makes an almost perfect linkage between the success or failure of the individual and that of the group. (You’ll never have people congratulating you on singing your part perfectly while the choir as a whole sings off-key.)” When we think of our team as producing music together, we see that this group composed of disparate parts must together be responsible for the quality of the result, allowing for a separate testing role but not reserving the testing perspective for that one individual. All team members must pursue a quality outcome, not only the customer and the programmer, as Agile purists would have it. One aspect of such a committed team is a willingness to contend respectfully with one another, for we would readily ignore others whose perspectives had no value. When we see that all of our striving contributes to the good of the whole, the struggle toward understanding and consensus encourages us to embrace even the brief discomfort of disagreement.

Image Credit

CAST is online streaming the keynotes and Emerging Topics track again this year.
